The Death Of The Commercial Database: Oracle's Dilemma

Contributing Authors: Fred McClimans & Zach Mitchell.

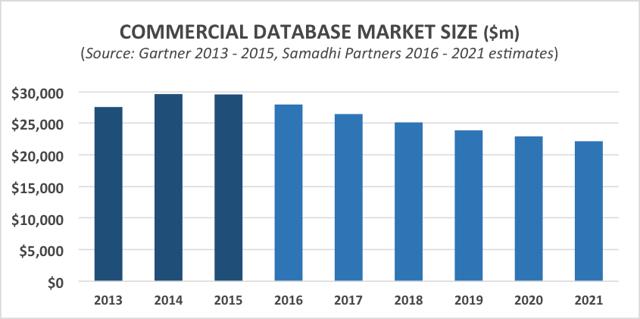

We see the $29.6b commercial database market contracting 20-30% by 2021, and do not believe Oracle (NYSE:ORCL) can transition its revenue streams (from legacy commercial database to cloud-based subscription offerings) fast enough to offset the decline of this market, which represents a major legacy core of its revenue.
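To put that forecast in dollar terms, the sketch below applies the 20-30% contraction range to the $29.6b market figure cited above. It is plain arithmetic on the article's own numbers, not an independent estimate, and the function name is a hypothetical helper.

```python
# Apply the forecast contraction range to the cited market size.
MARKET_SIZE_BN = 29.6              # commercial database market, $ billions (from the text)
CONTRACTION_RANGE = (0.20, 0.30)   # forecast decline by 2021 (from the text)

def remaining_market(size_bn: float, contraction: float) -> float:
    """Market size after a fractional contraction, in $ billions."""
    return size_bn * (1.0 - contraction)

for c in CONTRACTION_RANGE:
    lost = MARKET_SIZE_BN * c
    left = remaining_market(MARKET_SIZE_BN, c)
    print(f"{c:.0%} contraction: ~${lost:.1f}b lost, ~${left:.1f}b remaining")
```

On these inputs, roughly $5.9b to $8.9b of annual commercial database revenue would disappear, leaving a market of roughly $20.7b to $23.7b.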

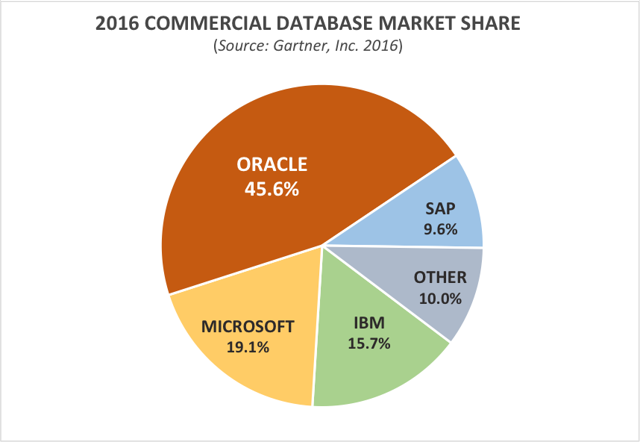

The commercial database market, 80% of which is an oligopoly of Oracle, IBM (NYSE:IBM), and Microsoft (NASDAQ:MSFT), has remained one of the most stable and sticky markets in all of tech for over two decades. However, we think the velocity and magnitude of its decline are likely to surprise many investors.

The deflationary pressures that have already hit the database market are just getting started, including:

- the migration to SaaS, where most offerings use free open-source databases;

- faster growth in use cases such as social media, IoT, and unstructured/semi-structured data that are ill-suited to the SQL standard upon which the database oligopoly is based;

- the increasing stability and functionality of a variety of free open-source options, most of which are "Not Only SQL" (NoSQL) and are therefore much better suited to the aforementioned use cases; and

- the disproportionately large benefits that NoSQL databases continue to realize as a result of Moore's Law-driven improvements in processors, memory, solid-state storage, and network throughput (these improvements increase NoSQL's ability to handle both NoSQL and SQL use cases on the fly, progressively marginalizing SQL-only databases just as SQL-only databases marginalized mainframe-based databases during the late '80s and '90s).

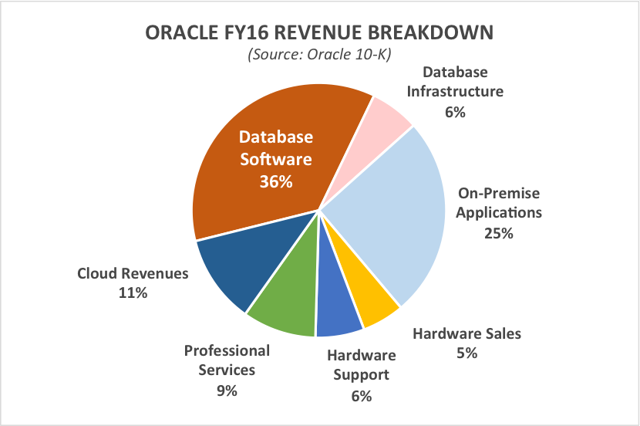

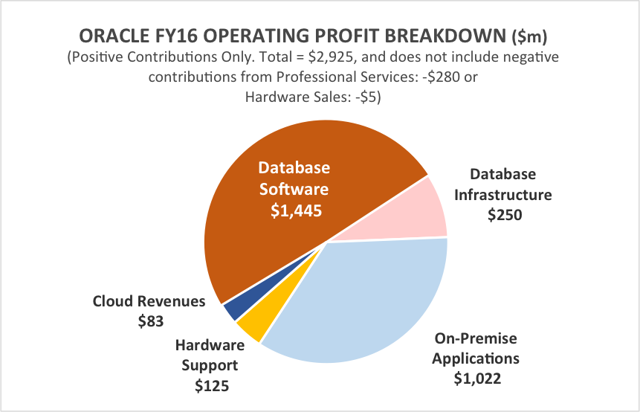

Revenue from database software represented ~36% of Oracle's total FY16 revenue and ~55% of its operating profit.

Note: Database Software and On-Premise Applications include both licenses and maintenance revenue.

The projected decline in Oracle's database revenue is distinct from, and in addition to, the decline in its on-premises application revenue, which accounted for ~25% of total FY16 revenue and ~39% of operating profit.

Microsoft and IBM will be hurt only marginally, as database software generates just ~5% of revenue for each.
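A simple calculation shows why the same market contraction matters so much more for Oracle. The sketch below multiplies each vendor's database share of total revenue (~36% for Oracle, ~5% for Microsoft and IBM, per the figures above) by the 20-30% contraction range. It assumes, purely for illustration, that each vendor's database revenue shrinks in line with the overall market and that the rest of the business is unchanged; the helper name is hypothetical.

```python
# Share of total revenue that comes from database software (figures from the text).
DB_REVENUE_SHARE = {"Oracle": 0.36, "Microsoft": 0.05, "IBM": 0.05}
CONTRACTION_RANGE = (0.20, 0.30)

def revenue_hit(db_share: float, contraction: float) -> float:
    """Fraction of total company revenue lost if database revenue falls by
    `contraction` and all other revenue is unchanged (illustrative assumption)."""
    return db_share * contraction

for vendor, share in DB_REVENUE_SHARE.items():
    low, high = (revenue_hit(share, c) for c in CONTRACTION_RANGE)
    print(f"{vendor}: {low:.1%} to {high:.1%} of total revenue")
```

Under this assumption, Microsoft and IBM each lose about 1.0% to 1.5% of total revenue, versus about 7.2% to 10.8% for Oracle; the hit to Oracle's operating profit would likely be larger still, since database software contributes ~55% of it.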

Without aggressive action to significantly increase non-database revenue, we do not believe Oracle can offset the oncoming decline in commercial database revenue fast enough to maintain its present valuation.

Specifically, we believe Oracle needs an even more radical and rapid organizational and cultural shift toward "cloud-first" that incentivizes both its sales force and its customers to move aggressively into the cloud. The company also needs to consolidate its various home-grown and acquired cloud/SaaS offerings toward a single platform in order to generate operational leverage down the road. Both of these initiatives will be painful in several ways, especially financially, for a few years to come. To partially offset the pain, Oracle should divest its remaining hardware and other non-core businesses.

Source: Morningstar

Theme Explanation

For readers interested in a more technical dive, below is a deeper, historical explanation of five interrelated trends that are gaining momentum and that, in combination, will cause the commercial-database market to contract 20% to 30% by 2021:

1. The Continued Enterprise Migration to SaaS/Cloud. Except for a few of the first SaaS offerings built on top of an Oracle database, namely Salesforce.com (NYSE:CRM), NetSuite (NYSE:N), and Oracle's own, it is difficult to find a SaaS provider that uses any commercial database. Among companies founded after 2005, the number that do is virtually zero.

The vast majority of SaaS providers today either use an open-source database or, as is the case with SaaS HCM vendor Workday (NYSE:WDAY), develop their own. Therefore, every user of an on-premises enterprise application who moves to SaaS, including users of the five core client-server application categories of ERP (Enterprise Resource Planning), CRM (Customer Relationship Management), HCM (Human Capital Management), SCM (Supply Chain Management), and BI (Business Intelligence), eliminates a commercial database seat and, with it, the maintenance/support and future upgrade revenue that seat would have generated. Even an enterprise seat that moves to Salesforce.com will generate far less revenue for Oracle than that seat did when it was deployed on-premises.

Hence, we believe the enterprise migration to SaaS/cloud is highly deflationary for commercial-database revenue. Further, we are still early in the migration from client-server to SaaS/cloud. Depending on which research one believes, this migration is only 10% to 25% complete and is only just beginning to impact more mission-critical applications - such as ERP - that are more likely to be running on higher-end SQL databases (including those from Oracle and IBM, and to a lesser extent, Microsoft).

2. Commercial SQL Databases are Not Well-Suited to Handle the Most Compelling Emerging Use Cases. After SQL was standardized in 1987, it went unchallenged for over 20 years in defining how data was organized, searched, and sorted. However, in the mid-2000s, colossal tech companies began running into the limitations of SQL databases in handling both the sheer volume and varying structure (or lack thereof) of the data that they wanted to preserve, navigate, analyze, and serve up on-demand to their users/customers.

Amazon (NASDAQ:AMZN), Google (NASDAQ:GOOG) (NASDAQ:GOOGL), LinkedIn (NYSE:LNKD), and Facebook (NASDAQ:FB) each solved its own scaling problems by developing and implementing its own database software that broke with the constraints of the SQL standard.

What's more, each company either released an open-source version of its database or published its design shortly afterward. Dozens of new open-source databases were consequently produced in 2008 and 2009, leading to what Guy Harrison, the author of Next Generation Databases, termed "a sort of Cambrian explosion." Because they departed from SQL, these databases all fell under the term "NoSQL," even though most were as different from each other as they were from SQL.

Given the exponential growth in the volume and variety of data - driven by richer social media content, more social media users, and an explosion of autonomously captured IoT data - and given the increasing desire for analysis on captured data, we believe the number of use cases suited to NoSQL will soon far exceed those suited to SQL.

The rise of these new use cases will drive greater adoption by large enterprises of open-source NoSQL solutions at the expense of commercial SQL databases.

3. NoSQL Databases and Schema-on-Read Processes Are on the Right Side of Moore's Law; SQL and Schema-on-Write Are on the Wrong Side. In general, NoSQL databases are substantially more processor-, memory-, and storage-intensive than SQL databases, even though they relax many of the constraints that SQL places on how data is organized. Though NoSQL database architectures existed well before 2005, at least in theory, there was simply not enough processing power, memory capacity, and storage space for them to be put to practical use outside of academia.

During the comparatively processor-, memory-, and bandwidth-starved 1980s and 1990s, adherence to the more rigid SQL standard was effectively compulsory to ensure the performance and reliability that enterprises needed in order to migrate their applications, especially the more mission-critical ones, from mainframes and minicomputers to the more distributed and network-dependent client-server architecture.

The price paid for SQL's acceptable performance and reliability included:

- The overall inflexibility of the data structure, called a schema;

- the onerous requirement to define that structure prior to the deployment of the database and its associated applications;

- the difficulty in changing that structure over time in order to capture different kinds of data, and to better reflect changes in the structure of the data and in the organization of the enterprise; and

- the requirement for a schema-on-write process, which fits data into the schema as it is written, as opposed to schema-on-read, which simply dumps data into a big "container" and organizes it into a schema later, when it is read.
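The difference between the two processes can be made concrete with a toy sketch. Everything below (the two-field `SCHEMA`, the in-memory lists standing in for a table and a "container", and the function names) is a hypothetical illustration in plain Python, not how any particular database implements it. It shows the trade-off described above: schema-on-write validates once, at write time, and rejects data that doesn't fit, while schema-on-read accepts anything and pays the parsing and structuring cost on every read.

```python
import json
from typing import Any

# Schema-on-write (hypothetical schema): structure is fixed up front and every
# record is checked against it as it is written; non-conforming records are rejected.
SCHEMA = {"user_id": int, "text": str}

def write_with_schema(table: list[dict[str, Any]], record: dict[str, Any]) -> None:
    if set(record) != set(SCHEMA):
        raise ValueError(f"fields {sorted(record)} do not match schema {sorted(SCHEMA)}")
    for field, expected in SCHEMA.items():
        if not isinstance(record[field], expected):
            raise TypeError(f"{field} must be {expected.__name__}")
    table.append(record)

# Schema-on-read: raw records are dumped into a "container" as-is...
def write_raw(container: list[str], record: dict[str, Any]) -> None:
    container.append(json.dumps(record))

# ...and a structure (here, a projection onto chosen fields) is applied only on read.
def read_with_schema(container: list[str], fields: list[str]) -> list[dict[str, Any]]:
    rows = []
    for raw in container:
        doc = json.loads(raw)                       # parsing cost paid on every read
        rows.append({f: doc.get(f) for f in fields})  # missing fields become None
    return rows

# Usage: a social post and an IoT reading have different shapes.
post = {"user_id": 1, "text": "hello"}
reading = {"device": "thermo-7", "temp_c": 21.5}

table: list[dict[str, Any]] = []
write_with_schema(table, post)
try:
    write_with_schema(table, reading)
except ValueError as err:
    print("rejected:", err)

container: list[str] = []
write_raw(container, post)
write_raw(container, reading)
print(read_with_schema(container, ["user_id", "temp_c"]))
# [{'user_id': 1, 'temp_c': None}, {'user_id': None, 'temp_c': 21.5}]
```

Changing what the schema-on-read side "sees" only requires a different `fields` list at query time, whereas the schema-on-write side would need its `SCHEMA` changed before the IoT record could be stored at all.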

Over time, however, Moore's Law-driven improvements in processing power, memory capacity and speed, storage capacity and speed, and network throughput have made the rigidity of the SQL standard and the requirement for schema-on-write processes increasingly unnecessary, and will continue to do so. Hence, with every Moore's Law cycle, SQL and its resource-efficient schema-on-write process lose competitive advantage, while NoSQL, with its relatively resource-inefficient but much more flexible schema-on-read process, becomes increasingly free of the constraints that prevented its adoption in the first place.

4. In-Memory Possibilities and the Elimination of HDD Compromises. When SQL was standardized, hard disk drives (HDDs) were the only cost-effective storage medium that could be accessed in real time. Therefore, much of the fundamental code in SQL database software is designed to accommodate the shortcomings of HDDs, such as the slow speed at which they read requested data and transfer it into memory, and their relatively high failure rates compared to a system's major solid-state components such as the CPU, memory, and networking hardware. Now that SSDs are rapidly becoming a cost-effective replacement for HDDs, much of the design, and certainly much of the code, that legacy SQL database software devoted to accommodating much slower HDDs is rendered unnecessary.
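A rough back-of-the-envelope calculation suggests why HDD-era design choices mattered so much. The latencies below are assumed, order-of-magnitude figures (about 10 ms for a random HDD read versus about 0.1 ms for an SSD), not measurements from this article, and the four-page index lookup is a hypothetical example.

```python
# Assumed, order-of-magnitude random-read latencies (not measured values).
HDD_RANDOM_READ_MS = 10.0   # seek + rotational delay on a spinning disk
SSD_RANDOM_READ_MS = 0.1    # flash random read

def lookup_cost_ms(random_reads: int, latency_ms: float) -> float:
    """Storage time for a lookup needing `random_reads` uncached reads."""
    return random_reads * latency_ms

def reads_per_second(latency_ms: float) -> float:
    """Upper bound on serial random reads per second at a given latency."""
    return 1000.0 / latency_ms

# Hypothetical index lookup that touches 4 pages not already in memory.
PAGES = 4
for name, lat in [("HDD", HDD_RANDOM_READ_MS), ("SSD", SSD_RANDOM_READ_MS)]:
    print(f"{name}: {lookup_cost_ms(PAGES, lat):.1f} ms per lookup, "
          f"~{reads_per_second(lat):,.0f} random reads/s")
```

With roughly a 100x gap per random read under these assumptions, code that works hard to avoid random disk access (large caches, write batching, carefully sequential layouts) plausibly buys far less on SSDs than it did on HDDs.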

In contrast, many NoSQL databases are written to take maximum advantage of SSD storage and will likely be updated to exploit even faster, emerging non-volatile memory technologies such as Intel (NASDAQ:INTC)/Micron's (NASDAQ:MU) 3D XPoint, which is just now becoming commercially available. We believe that, given the strong requirement to remain compliant with the SQL standard and to maintain their own backward compatibility, SQL database vendors will not be able to optimize their code for SSDs nearly as fast as many open-source projects can, putting them at a further competitive disadvantage: a classic example of Clayton Christensen's "innovator's dilemma."

5. Increasing Availability of "SQL Methadone" Solutions for Weaning Enterprises Off Expensive Commercial Databases. We are seeing an increasing number of software tools and services designed to help enterprises migrate from commercial SQL databases. Even the most mission-critical applications running on an Oracle or IBM database can be increasingly surrounded, "quarantined", and dismantled over time, much like what happened to many of the legacy mainframe applications during the shift to the client-server architecture in the 1990s.

While SQL databases from the likes of Oracle, IBM, and Microsoft will survive in some enterprises for many more years (again, much like mainframes), they will be increasingly marginalized and cost-reduced as much as possible. We see many of these tools already maturing within the Hadoop ecosystem, which offers multiple ways to integrate with SQL databases and/or put SQL interfaces and querying ability on top of NoSQL databases. In our opinion, this closely parallels the early-1990s emergence of multiple ways to integrate mainframe applications with PCs and client-server applications.
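One common way to "surround" and gradually dismantle a legacy database is what software engineers often call the strangler-fig pattern: applications talk to a facade, which routes each request to whichever store currently owns the data while records are moved over piece by piece. The sketch below is a hypothetical, in-memory illustration of that general pattern (the class names and dict-backed stores are assumptions, not any vendor's migration tool), and it ignores real-world concerns such as transactions, consistency during cutover, and SQL dialect translation.

```python
from typing import Optional

class LegacyStore:
    """Hypothetical stand-in for the incumbent commercial SQL database."""
    def __init__(self) -> None:
        self.rows: dict[str, dict] = {}
    def get(self, key: str) -> Optional[dict]:
        return self.rows.get(key)
    def put(self, key: str, value: dict) -> None:
        self.rows[key] = value
    def pop(self, key: str) -> Optional[dict]:
        return self.rows.pop(key, None)

class NewStore(LegacyStore):
    """Hypothetical stand-in for the replacement (e.g., open-source) database."""

class MigrationFacade:
    """Single entry point for applications; routes each key to the store that owns it."""
    def __init__(self, legacy: LegacyStore, new: NewStore) -> None:
        self.legacy, self.new = legacy, new
        self.migrated: set[str] = set()

    def _owner(self, key: str) -> LegacyStore:
        return self.new if key in self.migrated else self.legacy

    def get(self, key: str) -> Optional[dict]:
        return self._owner(key).get(key)

    def put(self, key: str, value: dict) -> None:
        # Brand-new keys go straight to the new store, so the legacy one stops growing.
        if key not in self.migrated and self.legacy.get(key) is None:
            self.migrated.add(key)
        self._owner(key).put(key, value)

    def migrate(self, key: str) -> None:
        # Move one existing record out of the legacy store ("dismantling" it).
        value = self.legacy.pop(key)
        if value is not None:
            self.new.put(key, value)
        self.migrated.add(key)

    def legacy_can_retire(self) -> bool:
        return len(self.legacy.rows) == 0

# Usage
legacy, new = LegacyStore(), NewStore()
legacy.put("cust-1", {"name": "Acme"})
legacy.put("cust-2", {"name": "Globex"})
facade = MigrationFacade(legacy, new)

facade.put("cust-3", {"name": "Initech"})    # new data lands in the new store
facade.migrate("cust-1")                      # existing data moves incrementally
print(facade.get("cust-1"), facade.get("cust-2"))
print(facade.legacy_can_retire())             # False: cust-2 still lives in legacy
facade.migrate("cust-2")
print(facade.legacy_can_retire())             # True
```

Because applications only ever see the facade, the legacy database can shrink record by record without a single high-risk cutover, which is the "quarantine" dynamic the section describes.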

To subscribe to the Samadhi Brief newsletter, please visit SamadhiPartners.com. An overview of our research process and explanation of the Samadhi Index can be found more