AI That Works For Workers: Survey Results

Image Source: Unsplash

AI and Workers

AI dominates the headlines with predictable extremes. Some days it’s market panic over Sam Altman calling everything a “bubble”2. Other days it’s horrific and morbid stories ending in death. The coverage lurches between utopian and dystopian framings - AI will revolutionize everything or destroy everything.

AI is at least four different transformations happening simultaneously - in how we work, how we invest, how we power our world, and how nations compete. We're trying to integrate an unpredictable technology into systems that weren't designed for it. What’s happening in this messy middle?

I wanted to know how people felt about AI. What do workers think about working alongside these new systems? So I asked them directly.

About 1,200 people responded to the survey in 24 hours. It was entirely anonymous, so I didn’t collect any demographic data, which is a limitation. The entire goal here is exploratory research: this is meant more as a preliminary look at how people are thinking during this time of immense change than as a source of declarative conclusions.

The Labor Market

The labor market is already feeling the impacts of AI.

-

Academic research on AI's labor impact keeps pointing to the same uncomfortable truth - disruption is already here.

-

A recent paper titled “Canaries in the Coal Mine” found a 13% relative drop in employment for young workers in AI-exposed jobs. These are young people losing work today while others their age make millions building the systems that displaced them.

-

The papers “Working with AI” and “Voice AI in Firms” explore how AI is moving into workplaces and which tasks it might be best suited to.

Studies (notably one out of MIT) have found that 95% of corporate AI pilots don’t really work… but the failures came down to how companies implemented the tools, not the technology itself. It’s still early innings.

The Market Narratives

The market thinks it’s going to work. Two things that the stock market cares about most are (1) rate cuts and (2) Nvidia. Nvidia is forecasting decelerating growth after two absolute ripper years. It could be a sign of what is to come for the AI industry broadly - a slowing.

-

Sam Altman himself has described the current AI market as a bubble (the honesty is refreshing), acknowledging froth even as his own company drives it.

-

He also claims that OpenAI will need to spend “trillions” on data centers to sustain ChatGPT’s growth.

-

Frothy valuations + trillion-dollar capital needs = volatility, so investors bounce between treating AI as a general-purpose utility (especially because OpenAI’s backup plan seems to be selling infrastructure services) and dismissing it as hype (the company still doesn’t turn a profit).

Both markets - the stock market and the labor market - reflect a deep uncertainty about whether AI is a genuine productivity revolution or just pure speculative fervor.

Infrastructure

But maybe it’s different this time? Part of the reason is the infrastructure build-out. Data centers are the economy. It’s not like the dot-com bubble, where Pets.com disappeared into a cloud of vapor and dreams overnight - there’s cement and land involved here.

-

McKinsey’s “The Cost of Compute” estimates that roughly $7 trillion will go into data centers by 2030. Even if AI companies struggle, those buildings will still need electricity and maintenance.

-

Goldman Sachs’ “The AI Power Bottleneck” focuses on a different constraint: electricity. AI data centers could drive 100 GW of new global power demand by 2030, equivalent to 75 million American homes (!). That’s a huge physical commitment.

Companies building trillion-dollar data centers can't afford to wait for thoughtful AI integration strategies. They need utilization rates that justify the capital expenditure, which means pushing AI tools into workflows before anyone really understands the implications. The workers in my survey kept talking about “rushed implementation” and “overzealous corporate people” forcing AI into areas it wasn't ready for. The concrete creates its own logic.

Also, China is moving quickly.

Geopolitics

Sam Altman has warned that US export controls on semiconductors may not effectively constrain Chinese AI progress, since China can pretty much work around anything at this point. DeepSeek's recent models prove his point. Chinese labs are achieving competitive performance with less advanced hardware by optimizing algorithms instead of throwing more compute at the problem.

This creates an acceleration trap. The external threat justifies rapid domestic AI adoption, but that acceleration translates into exactly the kind of chaotic workplace integration that workers are worried about. The same competitive pressures that make AI a national priority are the ones that make its deployment in the workplace feel haphazard and worker-hostile.

Why This Matters for Workers

Each of these domains creates pressure that ultimately lands on individual workers trying to figure out their relationship with AI systems. The labor market research suggests disruption is already happening. The stock market needs the AI boom to continue, probably. The infrastructure investments create pressure for rapid deployment. The geopolitical competition adds urgency that makes careful implementation feel like a luxury we can't afford.

But here's what all this macro analysis misses: how do actual people navigate these pressures? What do they want? What are they worried about? How are they making sense of working alongside intelligence that's powerful but unpredictable3?

So… how do people feel about it?

A Survey About Making AI Work for Workers

I asked 11 questions4, with multiple chances for fill-in-the-blank responses:

-

How familiar are you with AI tools in your industry?

-

Have you used AI at work in the past 6 months?

-

What’s the biggest benefit you hope AI could bring to your work?

-

What’s your biggest concern about AI at work?

-

What role should workers have in decisions about how AI is implemented?

-

How much do you trust your employer or organization to use AI in ways that benefit workers?

-

Has your employer provided training on how to use AI tools?

-

If you could enact one policy to ensure AI benefits and complements workers, what would it be?

-

What industry or type of work are you in?

-

Are you a member of a union or worker association?

-

Any additional thoughts

Their responses largely showed a workforce that's neither totally enthusiastic nor completely resistant, but is actively thinking through what it means to work alongside intelligence that isn't quite human but isn't really predictable either (the hallucinations, for example5).

And just to be clear, there is a personal side to all of this - a lot of people are using the technology for a form of anthropomorphic bonding and chatbot codependency6. OpenAI had to roll back an update that made its model too sycophantic (too agreeable), and when it later swapped its older models out for GPT-5, people got mad at them, as the New York Times reported:

ChatGPT was “hitting a new high of daily users every day,” and physicists and biologists are praising GPT-57 for helping them do their work, Mr. Altman said on Thursday. “And then you have people that are like: ‘You took away my friend. This is evil. You are evil. I need it back.’”

Our relationship with AI is complicated. I am not going to focus on the more personal side of AI in this piece. The labs want it to be this huge, intelligent, math champion thing that takes over life and work, but most people just want a buddy that they can ask something like “whre shld I buy new dog food for dog puppy” or “help me analyze this dataset pls”.

In this tense in-between time, we have to know what people are thinking about it.

Executive Summary

-

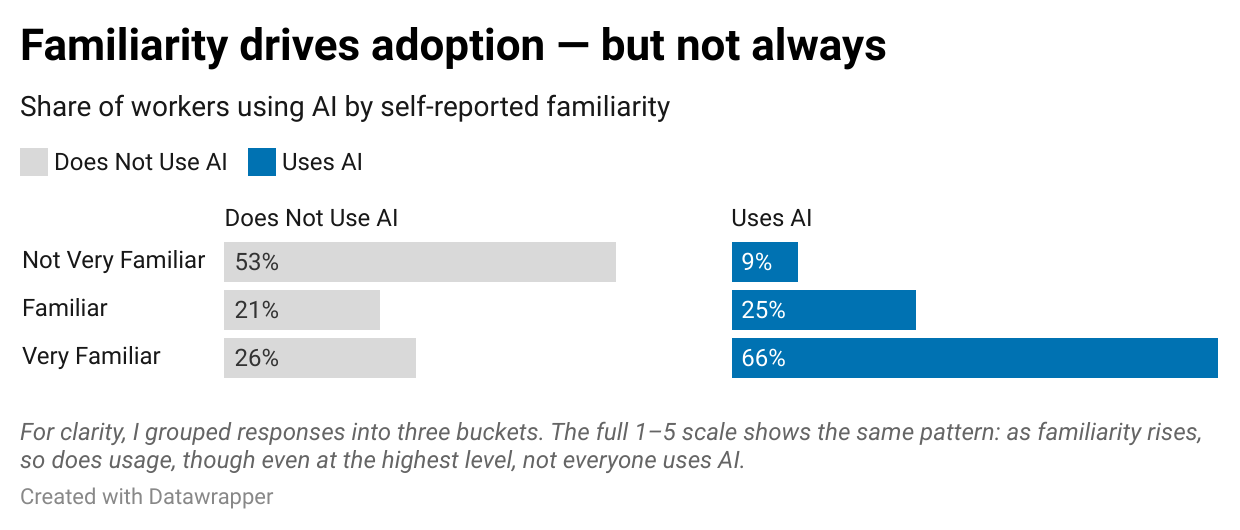

Familiarity vs usage: 85% are familiar, but even among the very familiar, more than a quarter don’t use AI at work.

-

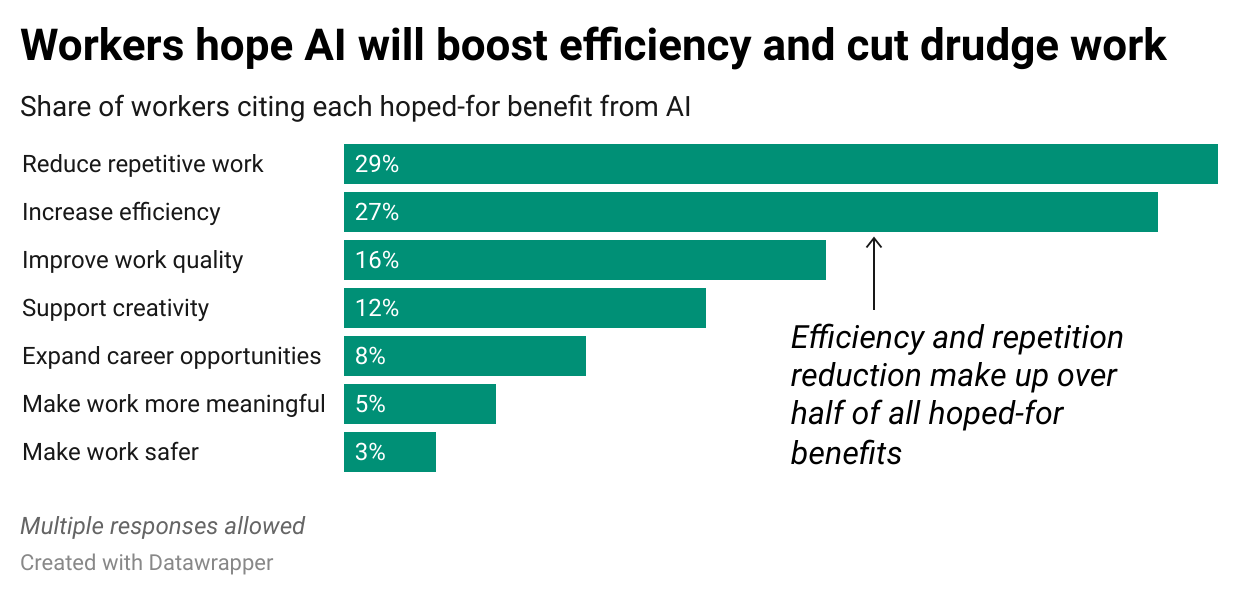

Hopes: Workers want AI to take over the boring parts of their jobs. The top hoped-for benefits were reducing repetitive work and increasing efficiency.

-

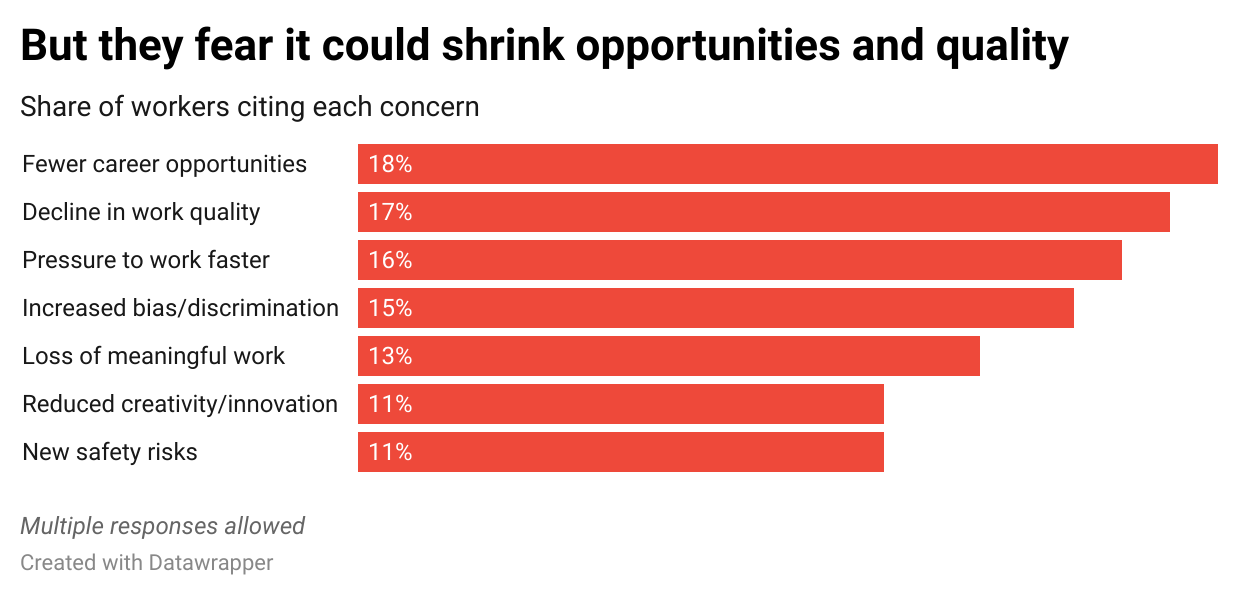

Concerns: Workers are less worried about total replacement, and more worried about erosion of the good parts of their jobs.

-

Industry differences: Concerns reflect professional identity: healthcare workers fear accuracy errors, teachers fear loss of connection, and tech workers fear diminished creativity.

-

Decision-making: 62% want shared decision-making in how AI is implemented at work, and 15% want full authority. Fewer than 2% are comfortable with no input.

-

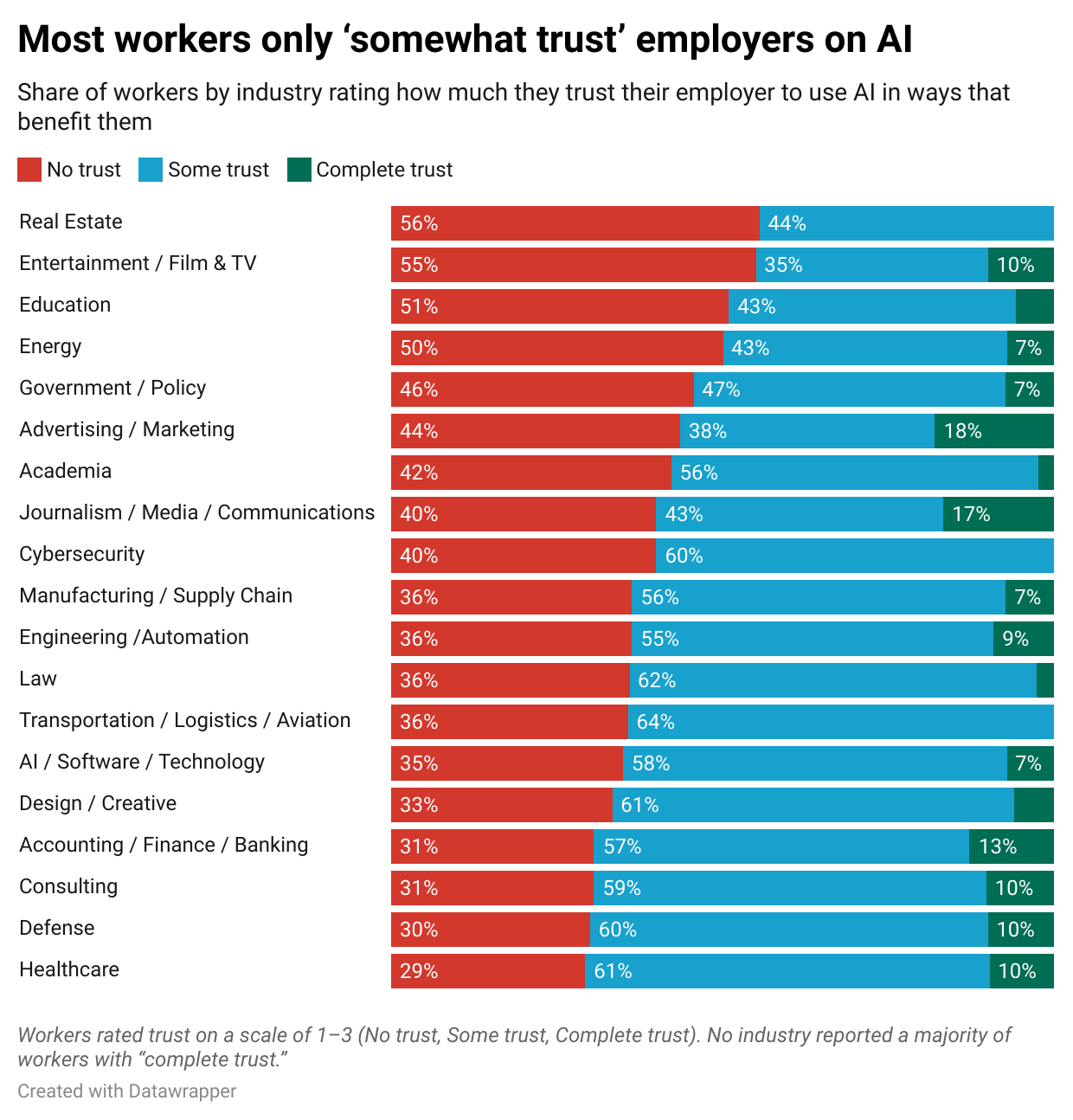

Trust paradox: Most people “somewhat trust” their employers on AI. But in many industries, a majority report no trust. No industry reached a majority “complete trust.”

-

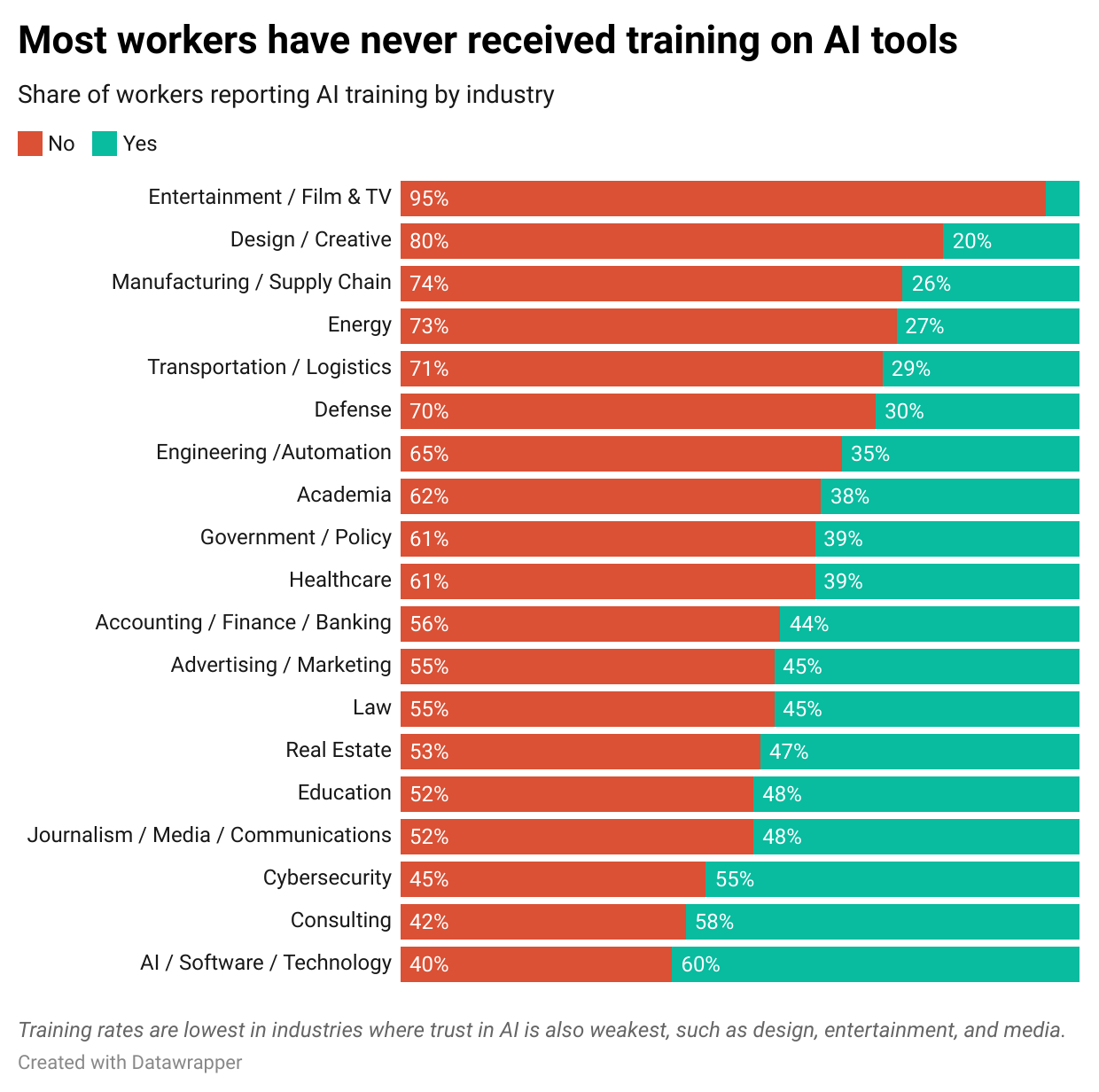

Training gap: Only about 60% of respondents have received training, with especially low rates in creative industries (20%) and entertainment (5%).

-

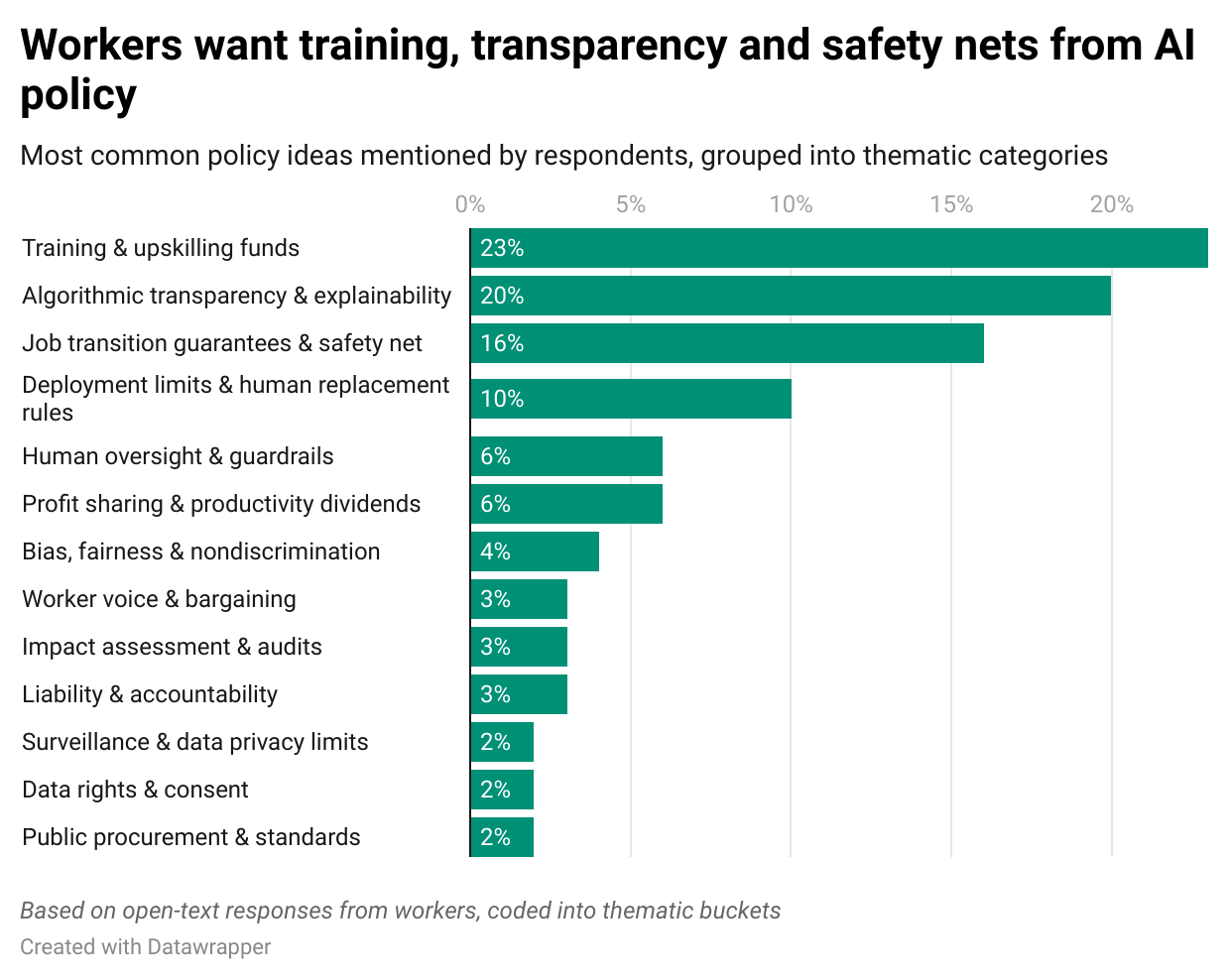

Policy demands: The most common requests were: training/upskilling funds, algorithmic transparency, and safety nets for displaced workers.

Questions 1 and 2: Familiarity and Usage

Most respondents who use AI said that they were at least “familiar” with AI tools (ranking 3 or above). And familiarity clearly drives some element of adoption: just 9% of workers who say they’re “not very familiar” use AI at work, compared to two-thirds of those who report being “very familiar.”

But the relationship isn’t perfect. There’s an interesting group here: the 26% of “very familiar” respondents who don’t use AI at work. These are people who know exactly what these systems are capable of and may have deliberately decided not to integrate them into their workflows.

Questions 3 and 4: Benefits and Concerns

When asked what they hoped AI could do for them, workers overwhelmingly pointed to the perhaps boring parts of their jobs: reducing repetitive tasks and making work more efficient. The main hope that people have for the technology is that AI becomes a productivity tool that frees up time and energy for more meaningful work. Below are some quotes from the survey:

-

“If it can take away the paperwork, that’s a huge win.” - Healthcare worker

-

“AI should be doing the boring parts so I can spend more time on the thinking parts.” - Tech worker


But those same workers are also worried about fewer career opportunities and a decline in work quality. In other words: even as they want AI to take over the drudge work, they fear it could take away the parts of their work that matter most for advancement and identity.

-

“My worry is that overzealous corporate people looking to reduce headcount will force it into areas it’s not good enough at yet, and it causes major problems.” - Automotive engineer

-

“Hospitality is already stretched thin. If they throw AI into scheduling or customer service, I’m worried it’ll just mean more stress for us when the tech doesn’t work right.” — Hospitality worker


Question 9: Industry Analysis

Breaking down the concerns and benefits by industry reveals even more. Most industries, like tech, finance, and consulting, are most excited about reducing repetitive work and increasing efficiency, and most concerned about job loss.

But the concerns vary widely: academia and healthcare are most concerned about a decline in accuracy. Design/creative roles are worried about a loss of creativity. Government workers are most concerned about bias.

Healthcare workers specifically are drawn to efficiency gains, but are very worried about accuracy, liability, and patient safety:

-

“We have the option of using an AI scribe software that can also enter orders. Some providers love it and say they can see a lot more patients.” - Cardiology worker

-

“AI in medical coding could save significant time, but I would not trust it on anything but simple cases. There is too much $ at stake to risk a denial on medium or high complexity case to allow AI to do medical coding unsupervised.” - Healthcare worker

-

“Other things will have to change to allow for more AI like legal framework for handling malpractice in medicine for example. Is it on the physician to have quality AI tools or the hospital?” - Healthcare worker

Education workers care less about efficiency than about losing meaningful connection:

-

“There’s talk at my school of teachers being forced to use AI to give feedback to students because, for years, we’ve complained about not having enough time to do our jobs. Instead of acknowledging the validity of that condition we’ll now be mandated to not use our skills as we wish…” - Education worker

-

“AI in education is swiftly becoming an ouroboros. Students write papers with AI that teachers grade with AI or use AI to detect itself… The results are facile, simplistic and passable if you don’t really care about what you’re teaching. The overworked, underpaid teacher is tempted, but for me all the AI usage in education falls short of the goal of humanistic education.” - Education worker

Tech workers are conflicted - AI boosts efficiency (that is indeed the main selling point) but it also creates existential worries about skill relevance and creativity.

-

“Use AI every day to code and my team is older and doesn’t understand truly how helpful it is. I work probably 15 hours on a 40-hour schedule. AI is the best thing to happen to my career but I now am kinda stuck because I don’t know my next career move. I am a developer but I wouldn’t pass a technical interview without AI, but that shouldn’t matter anymore?” - Geospatial Mapping

-

“AI can be dangerous if we let it run free, humans should always be there to check and correct.” - AI worker

Each profession's relationship with AI seemed to reflect deeper questions about professional identity and purpose. Healthcare workers worried about accuracy because accuracy defines competence in their field. Tech workers worried about creativity because creative problem-solving is their core value proposition. Teachers worried about meaningful human connection because that's what makes education more than information transfer.

People are using AI as a mirror, reflecting back their own understanding of what makes their work worthwhile.

Questions 5, 6, and 7: Decision-Making, Trust, and Training

When asked the role that workers should have in decisions about how AI is implemented, about 62% of respondents want shared decision-making over AI implementation, 15% want full authority, and fewer than 2% are comfortable with no input.

Most people somewhat trust their employers to implement AI in ways that benefit workers: on a scale of 1 to 3, with 1 being no trust and 3 being complete trust, most people chose 2. But a few industries were majority “no trust,” and no industry had a majority choosing “complete trust.”

This reflects most AI conversations. Most people who have been experimenting with AI understand its capabilities and feel some excitement, but don’t necessarily trust it (and don’t really trust their employers). We trust it to do specific, bounded tasks that we can verify. We don't trust it to think for us.

That’s the difference between functional trust and existential trust. Functional trust covers bounded, verifiable, instrumental tasks, like “I trust it to transcribe a meeting, summarize notes, or generate test code - things I can check.” Existential trust means ceding judgment or cognition, like “I do not trust it to decide how I treat a patient, structure a contract, or grade a student’s essay.”

A few quotes from a variety of industries, spanning a range of trust levels, illustrate this:

-

“If benefits of AI are shared with workers it can be helpful, but I don’t have much trust in tech CEOs to allow worker input into how it’s used. Unions will be necessary to ensure this.” - Tech worker

-

“Trust is a factor — who can trust a billionaire? AI might be good for pulling up planning lists or Excel functions, but when it comes to real jobs you need humans.” - Transportation worker

-

“People are far too trusting of AI, they seem to think it’s all knowing and that’s a greater issue in my opinion than any other since the management class looks at it as a replacement for people when in reality it’s at best a faulty assistant.” - Fintech

-

“I work in a technical job, and struggle a lot with being able to factually trust AI. My worry is that overzealous corporate people looking to reduce headcount will force it into areas it’s not good enough at yet, and it causes major problems.” - Automotive Engineer

And so the key to building trust might be a combination of shared decision-making and training. Even though most people are familiar with AI and most use it, a large share of them (roughly 40%) have not been trained on it.

-

Only about 60% of workers have received AI training, and training rates are especially low in low-trust industries like Design/Creative (20% trained) and Entertainment/Film & TV (5% trained).

-

Compare that to technology (60% trained) and consulting (58% trained) and it becomes rather clear where that low trust might be coming from.


So one way to build trust in these tools could be providing training.

-

“I believe that AI can make most knowledge workers incredibly more efficient, like I am already experiencing in my day to day. I train my students not only to work effectively with AI, but also how to do so without decreasing other important skills which might get lost by using especially GenAI to execute ‘menial’ tasks for them.” - Physics, Machine Learning worker

-

“My job is pretty safe from AI. But I worry that the world is hurtling towards this mindset of scarcity, where AI is a replacement for humans. We should instead bolster the workforce by training people to use AI. It’ll improve the workers quality of life, while setting up a company for growth.” - Research/Development/Academia worker

-

“I really think training on information privacy and safety is most important. If we can keep information in a safe environment, then people can experiment, break stuff....in a safe way and learn how to implement for their own individual or department needs. But currently, I think line employees are loading "God knows what" into free AI models without understanding where the data is going.” - Finance worker

-

“The way to protect workers is by training and upskilling. You can’t force a company not to innovate — the government should promote upskilling and give incentives to companies that complete courses and programs.” - Tech worker

-

“We have to train people on the job — if they just rely on AI all the time, I worry about the level of learning happening. Mistakes will happen and most will burn out.” - Manufacturing worker

Question 8: Policy

When workers were asked what policies they actually wanted, the gap between what they want and what they currently get became even clearer. In the open-text responses, three themes dominated:

-

Training & Upskilling Funds - calls for employers (and government) to invest in programs that help workers adapt.

-

Algorithmic Transparency - demands that AI systems be explainable and auditable, so workers understand how decisions are made.

-

Transition Guarantees & Safety Nets - requests for income supports, severance, or job placement if AI leads to displacement.


People had specific requests around training and upskilling.

-

“Mandatory training on how to use AI for all staff to minimize the digital divide.” - Education worker

-

“Exceptional training on how AI tools work, how to use them effectively, and how not to use them.” - Manufacturing / Supply Chain worker

-

“I can’t believe I’m saying it, but mandatory trainings. AI adoption is happening, but without common understandings of uses/risks/benefits, gathering lessons learned, and without actionable ethical/privacy training.” - Government / Policy worker

They also want to see more transparency and oversight.

-

“Organizations that implement AI should be transparent in their decision-making processes, and the review process should be easy to access (for instance hiring or promotional decisions).” - Transportation / Logistics worker

-

“Radical transparency in the use of AI tools and algorithms to evaluate performance and worker efficiency.” - Tech worker

They would also like to see efforts to protect jobs or redistribute the gains from AI.

-

“No layoffs based on projections for ‘efficient’ use of AI; collaborative training on AI and collaborative development of practices, policies, and applications for AI.” - Consultant

-

“Severe tax punishments for companies who lay off workers to implement AI agents.” - Medical / Pharmaceutical worker

-

“Either an increase in salaries or maintaining salaries while reducing work hours. If AI delivers on the promise to streamline things and boost productivity, the workers should receive compensation rates to reflect that.” - Design / Creative worker

But overall, they want frameworks.

-

“Given the studies on how it is affecting critical thinking skills and the tremendous amount of it that is essentially stolen, I'd want to walk it back almost entirely and give policy-makers, workers, and scientists ample time to consider how it can be effectively deployed in the workforce ethically, without costing jobs.” - Finance worker

-

“Mandate a ‘Holistic Congruence Model and Measures for AI Adoption,’ including board-level corporate structures, security, data, ML/AI, and ecosystem maturity.” - Consultant

And these demands make sense as things continue to speed up. The team behind Kimi (a Moonshot AI model that performs quite well) mentioned in an interview recently that they were handing the wheel to their AI for training and processing. No more humans. That creates an environment with very little human input, just AI building upon itself. The implications are clear. These policy suggestions will be examined more in a future piece.

Question 10: Unions

Only about 8% of respondents in this survey were union members, perhaps a reminder of how small organized labor’s footprint is in much of the economy today. It’s hard to pull any meaningful takeaways from a small sample. Some of their thoughts below:

-

As a Baltimore construction worker co-op rebuilding redlined neighborhoods, we actually think we are in a rare spot where AI can be used to empower marginalized workers and communities. - Construction Worker

-

It’s very surreal seeing how AI is playing out while working at a public high school. - Education Worker

-

While my employer is training us to use the AI, they are also setting unrealistic goals of task to be accomplished follow by a weekly report which is said to provide transparency but the data collected on our task / output doesn’t tell whole story just the story the department heads want to report. - Government Worker

-

I'm deeply concerned about the lack of Junior engineering jobs since the rise of AI. I think one issue that hasn't been discussed a lot is when skilled workers leave the workforce, there's going to be less and less of a talent pool with experience to pull from. I also feel like all types of workers are expected to be doing much more work with less resources, and in some cases, AI doesn't help to fix issues, but individuals are expected to go faster. - Transportation and Logistics

Question 11: Any Additional Thoughts

The most important part of the survey was the open-ended comment box at the end, where I asked people to share anything else about their AI experiences that the formal questions hadn't captured. I’ve drawn on those responses throughout this essay.

I got hundreds and hundreds of mini-essays (thank you everyone!!) that read like dispatches from the front lines of a cultural transition. People were clearly hungry to talk about this stuff in more nuanced ways than public discourse (or their workplace) typically allows.

AI is forcing everyone to articulate why they work, what makes work meaningful, and what parts of human capability they most want to preserve and develop. AI is making us reckon with what it means to be human, in a way that not many of us have ever had to do.

What to Do Next?

The conventional narrative about AI and work focuses on displacement and efficiency: which jobs will be automated, how much productivity will increase, whether humans will become obsolete. But I think the real story is about people figuring out how to maintain their sense of purpose and agency.

Too many organizations are treating AI implementation as a purely technical challenge rather than a cultural transition. Too many workers are left to figure out AI integration on their own, without much institutional support or collective wisdom. Too much of our public discourse remains stuck in binary thinking about AI as either salvation or threat, missing the more complex reality of how people are actually living with these technologies.

People are remarkably thoughtful about these challenges when given the space to be. They're developing creative solutions and collaborative approaches that point toward more sustainable forms of human-AI integration. But there has to be leadership from government, companies, and the AI companies themselves. They have to (should?) listen to the needs of the people who actually use these products.

The story unfolding in offices and schools and hospitals and design studios across the country isn't the one the headlines describe, at least not fully. It’s not just revolution or ruin. It’s the messy middle, where people are building new rules of work in real time. This is about people figuring out how to remain fully human while working alongside forms of intelligence that are powerful, useful, and different from our own.

1 I am going to spend more time refining the results and am working with a policy group to help propose some of these policies to governments. This is not the end of this survey, as the results deserve analysis beyond a Substack!

2 Or because Nvidia says that growth is decelerating

3 GDP is going on the blockchain so maybe that bodes well for AI too

4 One big takeaway is that people were very excited to be asked about AI. Many responses expressed gratitude that someone was listening.

5 Maia Mindel noted that a metaphor people use here is “if there was a dog that could read the newspaper and got 80% of the stories right, it'd be a pretty cool thing for many things, but not for learning about the news”

6 Elon Musk won’t stop tweeting about Ani, one of Grok’s AI companions. Meta AI allowed users to make a bunch of bots like “Step Mom” and “Russian Girl,” which is strange. The ‘slopgularity,’ as Danielle Fong called it.

7 Anthony Lee Zhang has an interesting hypothesis about the relative failure of the GPT-5 launch: “GPT5 supports my "John Von Neumann" hypothesis: Superintelligence is impossible. The models will not substantively improve past this point. There is a physical limit to intelligence, which is essentially equal to John Von Neumann's IQ, which o3/o4 has more or less achieved”


Disclaimer: This is not financial advice or recommendation for any investment. The Content is for informational purposes only, you should not construe any such information or other material as legal, ...
