AI: The Good, The Bad And The... Woah

Photo by Steve Johnson on Unsplash

The good news about AI is that there will be productive uses. The bad news is that it will take 5 to 10 years to sort the wheat from the chaff. According to a recent study by Morgan Stanley, it takes 14 years to reap the productivity gains from a typical New Tech adoption cycle: AI Adoption Rate To Outpace Past Tech Cycles, But Measurable Economic Impact May Not Arrive Until Late Decade (Morgan Stanley).

Richard Bonugli and I discuss the good and the bad in AI in our new podcast/video.

The adoption process isn't as smooth as promoters claim: MIT report: 95% of generative AI pilots at companies are failing.

The other bad news is that the malicious uses of AI are already in full bloom. While we're waiting around for AI tools to find use cases that actually increase productivity (as opposed to doing BS Work that has little to no real value), we'll have to deal with an ever-expanding onslaught of these malicious uses.

The Era of AI-Generated Ransomware Has Arrived (WIRED.com)

AI Is Being Weaponized For Cybercrime In 'Unprecedented' Ways, Researchers Warn (Zero Hedge)

The dark side of AI is not just an institutional issue; AI impacts individuals and families in ways that are difficult to predict, discern or control:

The family of teenager who died by suicide alleges OpenAI's ChatGPT is to blame (NBC News)

It's instructive to compare AI adoption with the Internet's adoption process. The most striking difference is that Internet 1.0 (late 1990s to early 2000s) was visibly beneficial and lacked the Internet's current capacity for malicious activity. In Internet 1.0, we weren't inundated with spam, phishing, etc.--the systems needed for these plagues didn't exist.

In AI, it's the malicious uses that are expanding while the truly productive uses are lagging.

Promoters claim AI is already universally productive, but this is more hype than reality. The truly productive use cases are customized and specific to narrow fields. In terms of general uses, AI Slop is the primary output, degrading legitimate content and polluting future AI scraping/training with inaccuracies. Recent research has found that AI scrapers favor AI-generated content, so with AI Slop, it's garbage in, garbage out, stretching to infinity.

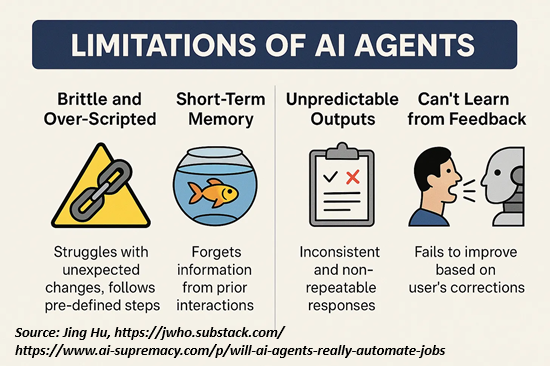

As the links below document, AI tools have inherent limits that impact their utility. The hype claims that scaling (adding more processors) will solve all these technical limits, but that isn't the case. The models are intrinsically limited, and these limits can't be dissolved with a few coding tricks or more processing power.

So while we wait for truly productive specific applications of generative AI, we're at risk of being overwhelmed by the malicious uses, which are already productive for the criminal class. The most pressing need now is for AI tools to protect us from AI tools.

Never mind the good and the bad--watch out for the Woah.

Disclosures: None.